So You Want To Do Robots, Part 2: What do you need to invent?

About this series

I’ve been working on general purpose robots with Everyday Robots for 8 years, and was the engineering lead of the product/applications group until my team and I were impacted[1] by the recent Alphabet layoffs. This series is an attempt to share almost a decade of lessons learned so you can get a head start making robots that live and work among us. Part 1 is here.

What are we even doing?

First let’s be clear on the goal. You want to make a robot that changes the world. It is going to be used in all kinds of places, free humanity from drudgery and scarcity and be super freaking cool. The key to making this happen is to make robots work in human spaces.

“I mean, I’d settle for building cool robots that never go to market as long as someone else is paying me…” - most roboticists

This is not a hot take. Other people have figured this out and tried (many of them with a lot of money to throw at the problem), so where are the robots?

Everyone before you has failed to discover the breakthroughs that let the robots really work.[2] Your moonshot-hard-problem is to discover those breakthroughs. Across robotics, folks have lots of different ideas of what that technology will be, but until someone makes the breakthrough you don’t know which one will work. You have to find out what works by trying it.[3] But we can get more specific about what problems it has to solve.

The Table of Update Rates

I have a big ass table for you to look at. It's going to be worth it, I promise. This is a table of the things that go into making robots work, organized by the time-scale (within an order of magnitude) they operate on.

Motor Control - 1000 Hz or more

Control current to the motors or control motor position or velocity.

Difficult, but well understood. This usually happens on motor driver boards thousands of times a second.
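To make "well understood" a bit more concrete, here is a minimal sketch of a velocity loop ticking at 1000 Hz. Everything in it is an assumption for illustration: the PI gains, the toy first-order motor model, and the function name `simulate_pi_velocity_loop` are made up, and a real motor driver would run something like this in firmware rather than Python.

```python
def simulate_pi_velocity_loop(target=10.0, kp=2.0, ki=40.0,
                              rate_hz=1000, duration_s=1.0,
                              motor_gain=1.0, motor_tau_s=0.05):
    """Run a PI velocity loop against a toy first-order motor model.

    All gains and motor constants are illustrative assumptions.
    """
    dt = 1.0 / rate_hz
    velocity = 0.0   # simulated motor velocity
    integral = 0.0   # accumulated velocity error
    history = []
    for _ in range(int(duration_s * rate_hz)):
        error = target - velocity
        integral += error * dt
        command = kp * error + ki * integral
        # Toy plant: velocity relaxes toward motor_gain * command
        # with time constant motor_tau_s (forward Euler step).
        velocity += dt * (motor_gain * command - velocity) / motor_tau_s
        history.append(velocity)
    return history


history = simulate_pi_velocity_loop()
```

With these made-up numbers the simulated velocity settles on the target well within the one second simulated, which is the whole job of this layer: track whatever setpoint the layer above hands it.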

Real Time Trajectories - 100 Hz

Create smooth setpoint paths to feed to motor controllers.

Difficult, but fairly well understood. Often runs on a "real time compute" using a masochistic subset of C++ guaranteed to put hair on your chest.
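As a rough illustration of a "smooth setpoint path", here is a sketch that samples a minimum-jerk (quintic) position profile at 100 Hz. This is one common textbook choice, not necessarily what any particular robot runs, and the function name and parameters are hypothetical. Real systems do this in real-time C++ with velocity and acceleration limits; Python is used here only to show the shape of the idea.

```python
def min_jerk_setpoints(start, goal, duration_s, rate_hz=100):
    """Sample a minimum-jerk (quintic) position profile at a fixed rate.

    The profile starts and ends with zero velocity and acceleration.
    """
    steps = int(round(duration_s * rate_hz))
    setpoints = []
    for i in range(steps + 1):
        s = i / steps  # normalized time in [0, 1]
        blend = 10 * s**3 - 15 * s**4 + 6 * s**5
        setpoints.append(start + (goal - start) * blend)
    return setpoints


# Move one joint from 0.0 to 1.0 rad over 2 seconds: 201 setpoints at 100 Hz.
setpoints = min_jerk_setpoints(0.0, 1.0, 2.0)
```

Each of these setpoints would then be handed down to the motor control layer, one per 10 ms tick.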

Path Generation: The Dexterity Problem - 10 Hz - 1 Hz

Create a trajectory to solve a particular problem based on sensor input.

The kinds of problems here are "figure out how to move the arm to <open a door/grasp a can/push a button/stack a bag>" or "how to drive through a crowded cafe".

These are completely open problems in robotics. Here be dragons. This area includes things like "how do we change our plan not to hit things but still do the thing", various flavors of Learning from Demonstration and Reinforcement Learning, Machine Learning Perception plugged into classical planning, model predictive control, hybrid approaches and Contextual Bandits, and other things people haven't tried yet. This is also the frequency that teleop operates in.

Classical robotics solves some non-contact subsets of these problems pretty well, but with contact this is Hard.

Sequencing & Task Planning - 1 Hz - 0.1 Hz (1 - 60 seconds)

Sequence actions to accomplish a task.

This includes things like "To sort a bottle you need to pick it up, move to the bin and drop it" and "To clean a cafe, drive to each table, wipe its surface from left to right and then drive away". Classical solutions to this problem include state machines and pre-condition/post-condition tree planners. Exciting new ML solutions include using large language models (e.g. SayCan). This is the frequency where remote human assistance (a high-level question/answer interface instead of direct teleop) works.

In my experience, this part turns out to be pretty simple in practice, and things like for-loops or queues combined with a few minutes of coding sequences work pretty well.
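Here is roughly what "a queue and a few minutes of coding" can look like, using the bottle-sorting example. The skill names (`pick`, `drive_to`, `drop`) and the stub implementations are hypothetical; in a real system each one would be a call into the dexterity layer, which is where the hard part lives.

```python
from collections import deque


def run_sequence(steps, skills):
    """Run (skill_name, argument) steps in order.

    Stops at the first step that reports failure and returns
    (completed_steps, failed_step_or_None).
    """
    queue = deque(steps)
    completed = []
    while queue:
        name, arg = queue.popleft()
        if not skills[name](arg):
            return completed, (name, arg)
        completed.append((name, arg))
    return completed, None


# Hypothetical stand-ins for real skills; each just logs and succeeds.
log = []


def make_stub(name):
    def skill(arg):
        log.append(f"{name}({arg})")
        return True
    return skill


skills = {name: make_stub(name) for name in ("pick", "drive_to", "drop")}
sort_bottle = [("pick", "bottle"), ("drive_to", "bin"), ("drop", "bottle")]
done, failed = run_sequence(sort_bottle, skills)
```

The sequencing logic is a dozen lines. Every interesting failure will come from inside the skills themselves.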

Work Scheduling - minutes to hours

Deciding what task should be done when.

When should a robot stop cleaning a conference room and go prep the dining area? How many robots are needed to clean a cafe between lunch and dinner? Historically folks have solved these problems manually.

The (good?) news is that this problem only matters once you have more than a few dozen robots doing more than 2-3 useful things. If you want to make a general purpose robotics startup, you want to get there eventually, but first you gotta focus. There are literally hundreds of interesting problems you are going to need to solve and you don’t need to solve this one yet. Wait until you close your Series C.

The Dexterity Problem: More important than the others.

Not to say that there aren't important improvements to be had at every level, but the dexterity problem is the important one. Your robots need to pick things up, push things, open things, scrub things and squeeze around people. If you don't solve the dexterity problem you can't make robots that work in human spaces.

Attention humans, it is your responsibility to dodge!

But if you do solve the dexterity problem, I bet you can use off-the-shelf tech for the other layers and make an enormous robot business. It's possible that you also need a breakthrough in sequencing & task planning, but my gut is that for the first 10 million robots, the tasks they do will be structured enough that simple techniques will suffice. Either way, solving sequencing for arbitrary tasks in a general way doesn't mean squat if you can't execute the steps, which are each going to be a dexterity problem.

So, your moonshot-hard-task is to invent the technology that lets us make robots work, and that means technology that lets us solve the Dexterity Problem.

Bummer

There are two reasons this is a bit of a bummer. The first is that you want your startup to be surfing the crest of a trending wave, and it's hard to think of a bigger trending wave in 2023 than large language models.[4] And the large language models seem like an amazing tool to solve… the sequencing problem.

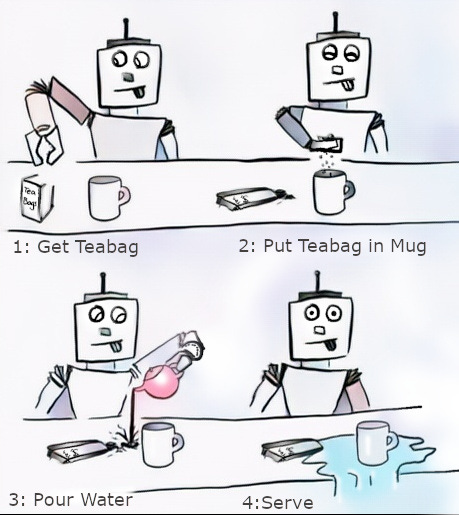

Hey RoboGPT, What are the steps to make tea?

I could be wrong, and as I’ll talk about in the ML episode,[5] you can go really far if you can twist your problem until it looks like an ML problem that someone else has solved really well,[6] so maybe there is some really slick way of turning dexterity problems into text completion. Those of you reading this in the distant future can let me know.

The second reason this is a bit of a bummer is that in your startup you generally want to fake-it-till-you-make-it[7] by doing things that don’t scale. The traditional way to do that with robots is to fake your AI by having your robots call humans whenever they don’t know what to do. Unfortunately this flavor of phone-a-friend[8] is easiest to implement if you need discrete decisions like “Should I go left or right?” or “Is this crosswalk safe to cross?” or “Did my gripper break off just now or what?”. This sort of question and answer also lends itself well to… the sequencing problem.
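The reason discrete decisions are so easy to hand off is that the whole phone-a-friend pattern fits in a few lines. This is a sketch under assumptions: the confidence scores, the 0.8 threshold, and the `ask_human` callback (standing in for whatever remote UI you build) are all hypothetical.

```python
def decide(options, scores, ask_human, min_confidence=0.8):
    """Pick the top-scoring option, or defer to a human when unsure.

    Returns (choice, who_decided).
    """
    best = max(options, key=lambda option: scores[option])
    if scores[best] >= min_confidence:
        return best, "robot"
    return ask_human(options), "human"


def fake_operator(options):
    # Stand-in for a remote person clicking a button; always picks the last option.
    return options[-1]


confident = decide(["left", "right"], {"left": 0.95, "right": 0.05}, fake_operator)
unsure = decide(["left", "right"], {"left": 0.55, "right": 0.45}, fake_operator)
```

Notice that nothing like this exists for "move the gripper 3 mm and twist": a continuous motion has no short list of options to put in front of a person.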

You can, of course, teleop (control robots directly over the internet) to solve dexterity problems, but it's much, much harder to do well. In general, driving a robot hand around in 6 dimensions (movement and rotation) on a 2D screen is hard, as is dealing with time-delay and giving the human useful feedback about forces. Most times when you see videos of folks doing well teleoping a robot, they are in the same room using their human eyeballs to tell what's going on, which is obviously less useful as a crutch for your startup. I do think that there is potential here with a 3rd person puppeteer interface in VR, but that's a much bigger project than a website that asks, “is this a stop sign?”

Good News: Perception works now(!)

Happily, it's not all bad news. The biggest change I’ve seen in the past decade of doing robots is that ML for vision went from kinda working some of the time to actually just working. In 2017 I described my job as “making the robots work even though perception is wrong about stuff”. In 2023, I don’t do that anymore. You can overfit an off-the-shelf architecture with an afternoon of data collection and get near pixel perfect 99% precision segmentation of the stuff you care about, if you limit the objects and backgrounds.

I don’t think everyone has fully realized the impact of this and I bet there are a bunch of dexterity problems (especially ones that don’t have fiddly force feedback) that are possible now just by plugging the output of perception into classical motion planning.
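As a sketch of what "plugging the output of perception into classical motion planning" can mean at its simplest: take a segmentation mask, find the object's pixel centroid, and back-project it through a pinhole camera model to get a 3D target in the camera frame. The tiny mask, the depth value, and the camera intrinsics below are toy assumptions, and the planner that would consume the target is left out entirely.

```python
def mask_centroid(mask):
    """Return the (u, v) pixel centroid of the truthy cells in a 2D mask."""
    pixels = [(u, v)
              for v, row in enumerate(mask)
              for u, cell in enumerate(row) if cell]
    if not pixels:
        return None
    n = len(pixels)
    return (sum(u for u, _ in pixels) / n, sum(v for _, v in pixels) / n)


def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at depth_m into the camera frame."""
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)


# Toy 4x4 segmentation mask: 1 marks pixels labeled as the object.
mask = [
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]
centroid = mask_centroid(mask)
# Toy intrinsics and depth, chosen only to keep the arithmetic readable.
target = backproject(*centroid, depth_m=1.0, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
```

A classical planner would then take `target` (after transforming it into the robot's base frame) as the goal for the arm.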

Probably Your Secret Sauce

Because you are limiting your ambition,[9] you don’t need to solve every dexterity problem: just the ones you need for your first tasks. But your dexterity problem is the biggest tech risk for your startup, so derisk early and be ready to pivot if you find a solution to a different problem (as long as it's something that someone wants to pay for). And if you like thinking about how your startup will fail,[10] you should subscribe for part 3, where we’ll talk about dying in Pilot Purgatory.

[1] Made redundant, sent home, ejected, canned, made Ex-Google-Xers.

[2] Obviously industrial robots work, but as the environment gets less structured (i.e. less custom designed around the robot), a robot’s ability to do stuff quickly approaches “unable to even”.

[3] Don’t worry, though, that won’t stop other robotics folks (including me) from telling you what they think, any chance they get.

[4] i.e. ChatGPT and, like, there are probably other ones?

[5] ML Essay? ML Part? ML Post? I donno. Nouns are hard. Anyway, you should probably subscribe so you don’t miss out on any of that great upcoming connnntennnnttttt

[6] My favorite example of this is the folks that used spectrograms to turn music into images and back and fine-tuned an image-generation network to be a music generation network. It's brilliant and has no right to work as well as it does.

[7] Unless you are doing medical stuff on real humans. Please don’t fake that. Kthxbai.

[8] Also called ‘human-in-the-loop’ or ‘wizard-of-oz’.

[10] I mean, who doesn’t love lying awake in the middle of the night contemplating failure, am I right?
