ML for Robots, Specialization vs Overfitting

So You Want To Do Robots: Part 5

About this series

I’ve been working on general purpose robots with Everyday Robots for 8 years, and was the engineering lead of the product/applications group until me and my team was impacted1 by the recent Alphabet layoffs. This series is an attempt to share almost a decade of lessons learned so you can get a head start making robots that live and work among us. Part 1 is here.

Training networks specializes them

A lot of folks want to add ML (machine learning) to make their robots more general purpose, but the truth is that ML tends to make things less general purpose and more specialized. This is because it looks like ML is learning to generalize concepts, but most of the time it's actually learning to interpolate between things it's been trained on.

Real Quick: Interpolation vs Extrapolation

This is gonna be important so I want to make sure we’re all on the same page. Interpolation is predicting in between data points you know. Neural Networks are good at interpolation.

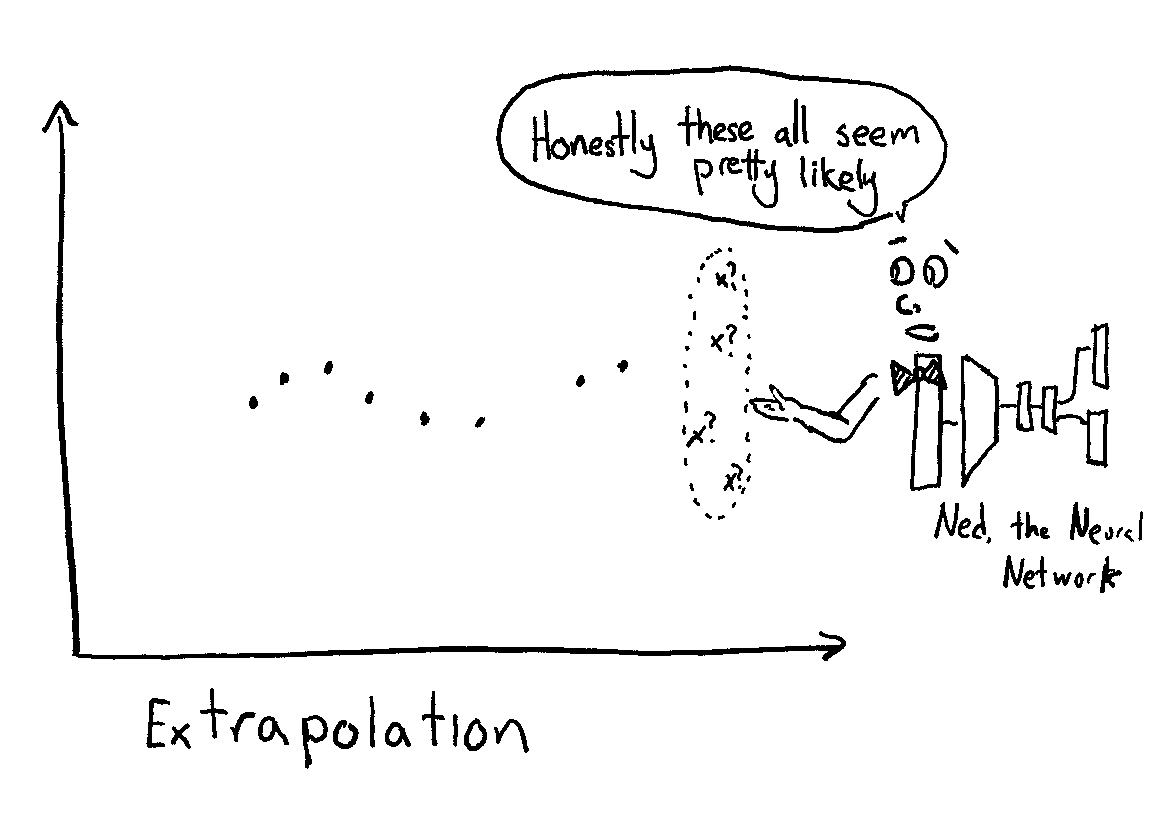

Extrapolation is predicting things outside the area you have data. Neural Networks are crap at extrapolation.

Where were we? Oh yeah, specialization

Say you want a robot to pick up empty bottles and cups off people’s desks so you take a bunch of training data (photos) taken of people’s desks at your office. If you successfully specialize your network, you can interpolate over the whole area.

Specialized

Dots are training data, red is the area where things work. You have "learned" this domain. If you add another desk similar to the ones in the training data, odds are your neural network will predict good things for that one too, because it probably falls in this clump.

Specialized to your problem is different from overfit

If you fit too complicated a model to too few data points you overfit your data and can no longer interpolate between them. Your model has fit just the training data at the cost of regions between them. Overfitting (bad) is different from specialization (good).

Overfit: Model only works for the training examples

Trying to generalize to a new domain

If you wanted to clean up cups, cans and bottles from a Chipotle restaurant (a new domain), you could try to use your model trained on your desks and it might work or it might not. It depends on if the domain of Chipotle tables falls within the imaginary clump of desks in the high dimensional mapping that your model has chosen. This is basically impossible to know without trying and is impossible to control. When people say that neural networks don't extrapolate they don't mean that they can't work on new domains, just that they only work in new domains sometimes, if you get really lucky. (Training the model from different random seeds could result in you getting lucky or unlucky. There is no way to know ahead of time).

Getting lucky in high dimensional space. Interpolation works: gets you (mostly) what you want!

Getting unlucky in high dimensional space. Need Extrapolation: Unlikely to work

If you want to work well at Chipotle and you can collect data there, you are probably better off dropping the requirement of working well at desks. (Although you can probably use your desk model as a starting point to make specializing on the next domain easier and, if you don't have enough Chipotle data, maybe your desk data can help prevent overfitting to your sparse Chipotle training data.)

You can increase your odds of getting lucky by having huge spanning training domains, so that new domains are likely contained inside your space:

Here the new domain falls in a space that you can interpolate to. Likely to work (Yay!)

But you can still get unlucky and have the new domain fall outside. And humans are basically completely incapable of reasoning about where in 256 (or higher) dimensional space these domains sit relative to each other in the particular embodiment of the neural network (and the arrangement can change at each training).2

New domain falls outside range. Who knew?

Also trying to span lots of domains often requires a lot more data (keeping the 20th class separated from the other 19 is much harder than keeping the 3rd class separated from the first two. This is a hockey stick, but the bad kind, where each incremental step forward requires more data than the previous.) And the way to count data is not the raw number of samples but the count of interesting, diverse samples. And diverse samples are even more expensive to get.

40,000 photos of the same three desks (not diverse)

40,000 photos: one photo of every Google Employee's desk (very diverse)3

Spanning domains to unlock new domains because they fall inside the range of things you have done is a powerful thing. That kind of strategy works when you have mountains and mountains of data. This is how things like ChatGPT work: they have trained on, like, literally the entire internet, and so most questions you ask end up being interpolations between things in the training set. This kind of data set just doesn’t exist for robotics, so unless you can figure out how to use someone else’s data and make it work for your robots you’re going to be stuck specializing to each domain you want to work in.

This post has been very high level and abstract. In the next section I’ll dive into the various philosophies on learning for robots, some concrete examples, and I’ll sling some hot takes on how to avoid the pitfalls we fell into.

You can read the next part here!

Thanks to Michael Quinlan and Mrinal Kalakris for reading drafts of this.

But I’ve had more time for blogging, so I got that going for me, which is nice.

Also, lucking out and ending up “between” things gets much much less likely in high dimensional space in a way that normal human brains like mine have a hard time visualizing.

Though Google security might have something to say about your scrappy data collection plan.