Writing Libraries for AIs to Use

I’ve been doing two unrelated things. The first is that I’ve been working on posetree.py, a library for doing pose math for robots. The second is that I’ve been working on a hobby video game (which you should play with soon before the server cost forces me to take it down). For both projects I’ve made heavy use of LLMs1 to code and holy moly it is a productivity multiplier.

And given how much I’ve begun relying on it in such a short time, I can’t imagine a future where most programmers don’t make very heavy use of AI assistance. Donald Knuth tells us, “Programs are meant to be read by humans and only incidentally for computers to execute.” but in the future we have a third category. I think that programs, libraries and programming languages that lend themselves to effective AI assistance will win over abstractions that LLMs are bad at.

So what does that look like? I don’t know,2 but I still wrote a whole post about me trying to find out.

My experience using AIs for coding

I’ve been using ChatGPT 43 and Github Copilot. ChatGPT feels like pair programming with a pretty junior engineer who doesn’t think that far ahead, but has memorized the documentation and types really fast. If you know what you want, but not exactly how to do it, ChatGPT is great.4 The first day of creating a game in javascript (a language I don’t know very well) it was easily a 10x productivity boost. I had an editable map, characters jumping around the scene and collecting gems and a working UI in about an hour. The productivity gain slows down because over time you realize that copy-and-pasting an isolated snippet for every problem you have doesn’t lead to great design. I found I still had to do some real engineering and refactoring to keep it from collapsing under its own weight.5 But it's still easily a 2-3x steady state on a domain I’m not a super expert in.

GPT also struggles with things that are legitimately tricky. It had a hard time getting the physics and collision code to produce nice gameplay, and I tried for a while describing the new undesirable behavior to it and trying its fixes a while before giving up, using my own brain, and implementing collision physics myself. But the ways that it failed reminded me of the kinds of tricky bugs I’ve made trying to get collisions to feel nice in previous game projects.

GitHub Copilot really shone on the posetree project. That one is a lot more mathy and there are a bunch of matchable-pattern stuff. I’d type

@property

def x_axis(

And it would fill in the (mostly) correct docstring and implementation to get the x-axis of the pose (nice) and then suggest that I probably want y-axis and z-axis. (I do, indeed. Thanks, AI). It has more context about your file so it has less of a tendency to forget the names of things, and often notices things you ought to have but don’t. (Yes, I also want a ‘frame’ property on Pose. Thanks again). But you can’t describe a problem and have solutions the way you can with GPT.6

A master plan to show how smart I am

I’m proud that code written with posetree tends to look more like natural language, which I think makes it easier to read and debug. I’d love to prove that it helps, and, because I’m an engineer, I’d love to quantify it, but that has seemed impossible.

However LLMs work in natural language, so maybe (I thought) I can create a set of problems of increasing complexity and trickiness and have ChatGPT solve them with and without my library. Maybe I can even have some number of instances do it and count how many correctly solve each problem and this will create a quantifiable measure of how much better my library is.

Results?

And so I tried that, and failed utterly.

But failing is interesting, so here’s what I learned.

Methodology

I started two GPT instances7 and gave them these prompts:

They both got:

“I'd like you to write some code for a mobile robot. The system is made up of the frames 'map', 'robot', 'tool', and 'camera'. 'robot' represents the robot base and has x pointing forward and z pointing up. 'map' origin is in the corner of the building, with z pointing up. 'camera' has z pointing out, and y pointing down. 'tool' has z pointing out of the gripper."

Then they got a custom second half:

GPTControl:

You have the API get_transform(parent, child) which returns a 4x4 rigid body transform matrix as a np.array. For example, to get the robot's pose in map frame you can call get_transform('map', 'robot').

GPTTest:

<paste the documentation for the pose API>

You can assume that there is a PoseTree instance called pose_tree. For example, to get the robot's pose in map frame you can call Pose(Transform.identity(), 'robot', pose_tree).in_frame("map")8.

Then I started asking them both a series of pose problems, drawn from things I’ve actually had to do with robots, starting easy and getting harder. I didn’t stick to that very long though, because of the way things started going off the rails.

AIs like to pattern match.

The first problem is that ChatGPT has seen lots and lots of ‘create a 4x4 matrix transform, do some multiplication with it, and use numpy to scrape out your answer’ code in its training data. But posetree library is brand-spankin-new so all of its patterns are outside the training data. So when I asked for code to solve pose-problems it successfully used my library to write old-school transforms code.9

For example I asked, “implement distance_from_tool_to_camera() which returns the cartesian distance between the tool and the camera.” and got:

Which is… fine. But tool_pose.distance_to(camera_pose) is right there. As is np.linalg.norm(tool_pose.in_frame(‘camera’).position). The power of posetree is that you don’t have to convert everything to the same frame and then do linear algebra on it.

You’re doing it wrong!

I asked a few more questions before getting frustrated at it for using my library so badly. I couldn’t help myself. I wrote answers to each question myself using ‘canonical’ posetree abstractions and gave it those and I was rewarded this this gem:

“These improved implementations make excellent use of the posetree API methods, and they are more concise and readable compared to previous versions. They also handle frames properly, ensuring that operations are performed relative to the correct frame of reference.”

Thanks, GPT. This is why AIs are better than co-workers.

Ego assuaged, we soldiered on.

Examples are better than documentation

LLMs are fundamentally pattern matching machines and going from documentation to implementation was hard for it, but extrapolating from examples was easier. After seeing the solutions to the first three problems it did much better with the next two. However, the ControlGPT also did well on those questions and didn’t need me to give it extra examples.

If I cheat enough and wait enough I get the result I want!

Eventually I hit on a problem that my library + example-hinted GPT solved correctly and my no-library GPT failed on.

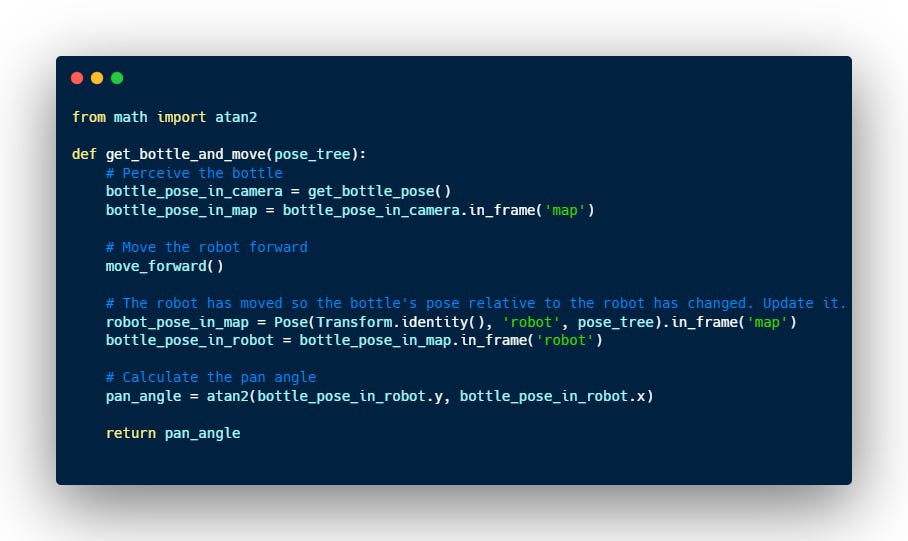

Next question: You have get_bottle_pose() which returns the bottle pose in the camera frame, and move_forward() which drives the robot some (unknown) amount forward. Once the robot moves, it will no longer be able to see the bottle. Please write a method to perceive the bottle, move the robot and then return the pan angle needed to point the camera at the bottle. (0 pan has the camera pointing in the robot x-axis, positive pan is positive rotation about robot-z).

TestGTP:

It creates robot_pose_in_map that is doesn’t need (and never uses), and ignores a perfectly good angle_about_z_to() method, but not bad.10

ControlGPT:

I mean he’s got the spirit, but boy is he confused. camera_t_robot doesn’t change when the robot moves, so robot_t_camera_after is the same as robot_t_camera. That means the expression

np.dot(robot_t_camera_after, np.linalg.inv(np.dot(get_transform('robot', 'camera'), camera_t_bottle)))

is

robot_t_camera * (robot_t_camera * camera_t_bottle).inverse

robot_t_camera * (robot_t_bottle).inverse

robot_t_camera * bottle_t_robot

And we’re making one of the classic blunders of matrix multiply pose math: We’ve got our matrices in the wrong order. That multiplication is easy to compute but gives a nonsensical transform (the words next to each other have to match, and camera != bottle) and we get a wrong answer.

But I’ve had my thumb on the scale and have kept going until I got the result that I wanted, so you really can’t call this any kind of proof that posetree is easier for LLMs to use.

Maybe I only give examples?

Since it started working so much better after I gave it some examples, I wondered if giving it the documentation had been a blunder. I know I often learn how to use a library by looking at the unit tests, and what is a unit test but a giant pile of examples waiting to be fed in?11 So I fired up another instance12 and pasted in the unit test. And that went even more poorly. I don’t know why. Hilariously, after pasting in hundreds of lines of posetree unit test, when I asked it to solve a pose problem its first response was to use ROS’s tf2 library.13

When I told it I wanted to use the posetree library, it tried but got… every? API call wrong?! I’m including it because it’s kind of impressive.

Like, literally every line is wrong. get_pose takes a position, quaternion and a defining frame, Pose’s constructor takes a transform and a frame (are those 6 numbers position and euler angles concatenated together? gross) and posetree.transform() does not exist and there is no analogous method it is thinking of. Wow.

A Last Minute Contender

While reading my draft of this my friend and actual ML expert, Michael Quinlan, asked why I hadn’t tried GPT’s code-interpreter mode.

“What’s that?” I asked.

“Oh, it's the thing where you upload your source files and ask it to use them to write code.”

So I zipped up my files, uploaded them and asked the first question and… it bombed it. More getting all the APIs wrong, plus it multiplied a pose by a transform (and the whole point of posetree is to not do that.) Total failure. Bummed, I tried one last thing. I said, “You are misusing the library. Can you read the README and try again?”

And, holy shit, it nailed it. It got all of the questions right with idiomatic usage of the library. It even got a really tricky question about generating a circle of pregrasp poses which both other instances failed so badly I just gave up and moved on.

The only one it got wrong was the final question about looking at the bottle after the robot moved. (It stored the pose in robot frame so after the robot moved it still looked at where the bottle used to be relative to the robot.) Curious I asked, “This is incorrect. Can you figure out why?” and got:

The issue arises from the fact that the robot's perception of the bottle's pose is outdated once the robot moves. The perceived bottle pose is in the camera's frame of reference before the robot moves. After the robot moves, this pose information is no longer valid because the camera (and therefore its frame of reference) has moved along with the robot.

The correct approach should be:

Perceive the bottle and get its pose in the camera frame.

Convert this pose to a frame that does not move with the robot, such as the 'map' frame.

Move the robot forward.

Now, convert the stored bottle pose from the 'map' frame back to the 'robot' frame.

Calculate the pan angle based on this updated bottle pose in the robot's frame.

And followed up with a correct implementation.

Conclusion?

So here we are, at the end of my journey. Right now, in 2023, it seems that if you want LLMs to be really good at your library you should have it be really popular before 2021 so that there are a ton of examples from stack overflow in its training data. If you can’t pull that off, using the code-interpreter mode, and writing good documentation works pretty darn well, though it’s pretty funny that you have to tell your AI to RTFM.

I would love to see more support for LoRAs (or something like them) on the scale of Stable Diffusion in terms of ease of use. A LoRA is a small, easy to train “mixin” network. In image generation it lets the AI draw a particular person, or style or even abstract property like “extra detailed”. For Stable Diffusion there are websites where you can browse LoRAs (with example images), copy the link and put it in the UI and it will download it, and start using it. Ease of use matters for adoption. If I could create curated examples/documentation, and release a mixin for a coding LLM that made it really good at writing code using my library, (and I thought that people would use it), I absolutely would.14 Someone should get on that. I can imagine a product where you pick your technology stack, add in all those mixins and spin up your pair-programming ai. That would be sweet.

It does seem like, for the most part, things that are tricky for humans are tricky for LLMs (matrix multiply order, timing on collisions before/after applying the timestep, remembering what you called that variable you just created, like, 30 seconds ago.) So for now I’m just going to continue trying to write software that is easy for humans to read and understand and hope for the best. But if you know about great ways to teach an LLM new tricks, let me know in the comments!

You can read the whole chats here:

Test: https://chat.openai.com/share/6bbdce27-9cd7-43dc-b9ae-4a9c93f82884

Control: https://chat.openai.com/share/c9953f39-5589-4430-8075-98defbdfa0da

Unit Test Input: https://chat.openai.com/share/0ff27691-dfc8-4969-b8f4-221848c8aea0

Code-interpreter with upload: https://chat.openai.com/share/21d0fd67-9e07-4123-b43e-6a2f8acf0edb

Thanks to Mrinal Kalakrishnan and Michael Quinlan for reading an early draft of this.

Large Language Models, in case you don’t live on Hacker News.

You’ve been warned.

GPT-3.5 makes so many subtle bugs that it doesn’t seem worth it for coding tasks. All the time you save writing you spend debugging.

It is also kind of like if every time you searched on stackoverflow you found a post with an example doing exactly the thing you wanted.

Not too much refactoring though, because this is a toy project with a limited life and I’d rather do new fun things with it and throw it all away later than use good engineering practices. I’m on vacation. #YOLO

GPT is inherently a Q&A interface which lets it suggest features that span html, backend and js, which a tab-complete affordance can’t give you. Although I have found myself writing comments in my code just for copilot like “// iterate over the largest elements in myarray” and then deleting them when it spits out the code I wanted. Also, after reading this footnote, Michael Quinlan informed me that there is a copilot chat that I can install, so I’m going to try that right away.

Or, as normal humans call them, tabs in my browser.

In the first version of my test I didn’t include the snippet of how to get a pose and it couldn't figure it out, so I added it to both versions.

It kind of reminds me of people who write a lot of embedded C using Python for the first time. They are using Python but writing code that looks like C not using any of the more powerful abstractions.

I’ve also noticed that GPT tends to be very verbose. I think it has a hard time getting lots of thought into few tokens so OpenAI prompts it to do many smaller steps, like creating intermediate variables.

Ironically most of my unit tests were written by ChatGPT. It's really good at “Here’s a method, write me some tests”. If the test fails you still have to dig in to decide if the test is wrong or the method, but I have to do that with my own tests too, so “human level performance”?

tab

More human-like performance, I suppose. The familiar has a ton of inertia with humans and, I guess, AIs.

The other barrier is that LLaMA is the most open LLM and therefore has the best tooling for LoRAs, but everything I’ve read claims that it is “just as good as GPT-3.5” which I’ve found to be just below the threshold of good enough. My info could be old, though, the landscape changes almost daily.

Cool post! Really interesting idea of being able to package your library with a pair programmer for it. Definitely seems like something that will happen relatively quickly

Very interesting post! I have been using ChatGPT a lot for coding, only on the 3.5 one really. It has been very helpful for my use cases. I wonder how much worse 3.5 would have performed than 4 for you. I also have not tried the Code Interpreter yet.

Perhaps an option would be to get GPT to write out high level english pseudocode, then have another pass convert the pseudocode to your library, maybe with a prompt that just contains all the library function definitions to remind it.